Subsets

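In Hold'em poker, there are 1755 strategically unique flops: the 22,100 possible three-card flops collapse to 1755 once flops that differ only by a relabelling of suits are treated as the same.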
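The 1755 figure can be checked by brute force. The sketch below is our own illustration, not how GTO+ enumerates flops. It generates all 22,100 three-card flops, maps each one to a canonical form by trying all 24 relabellings of the four suits and keeping the smallest result, and counts the distinct forms. Flops that share a canonical form are strategically identical.

```python
from itertools import combinations, permutations

# Cards are (rank, suit) pairs with ranks 0-12 and suits 0-3.
DECK = [(rank, suit) for rank in range(13) for suit in range(4)]
SUIT_PERMUTATIONS = list(permutations(range(4)))  # all 24 relabellings of the suits


def canonical(flop):
    """Smallest sorted form of the flop over all suit relabellings."""
    return min(
        tuple(sorted((rank, perm[suit]) for rank, suit in flop))
        for perm in SUIT_PERMUTATIONS
    )


def count_flops():
    """Return (number of raw flops, number of strategically unique flops)."""
    all_flops = list(combinations(DECK, 3))
    unique_classes = {canonical(flop) for flop in all_flops}
    return len(all_flops), len(unique_classes)


total, unique = count_flops()
print(total, unique)  # 22100 1755
```

The same total can be confirmed by hand: 13 trips boards, 156 paired rank combinations with 2 suit patterns each (312), and 286 unpaired rank combinations with 5 suit patterns each (monotone, rainbow, and three two-tone variants, 1430) give 13 + 312 + 1430 = 1755.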

When building a database of flops, one would ideally include every one of these flops.

The drawback is that processing a database of all 1755 flops takes considerable computation time.

To get around this, GTO+ offers several standard weighted subsets.

These subsets are smaller sets of flops that represent the full set of 1755 flops as closely as possible.
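As a rough illustration of what "weighted" means here, the sketch below estimates a full-set statistic from a subset by taking a weighted average over the subset's flops. The function name, the flop labels, and all numbers are hypothetical; this section does not describe how GTO+ chooses its flops or their weights. For comparison, in the full 1755-flop set each flop naturally carries a weight equal to the number of raw flops it stands for (4 for a monotone flop, 24 for a rainbow flop with three different ranks). We assume a subset's weights play a similar role, with each chosen flop also standing in for flops that were left out.

```python
from typing import Dict


def weighted_average(values: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted mean of a per-flop statistic over the flops in a subset.

    `values` maps a flop label to a statistic (for example a player's EV on
    that flop); `weights` maps the same labels to how much of the full flop
    population each subset flop is assumed to represent.
    """
    total_weight = sum(weights[flop] for flop in values)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(values[flop] * weights[flop] for flop in values) / total_weight


# Hypothetical 3-flop subset with made-up EVs and weights, for illustration only.
subset_ev = {"AsKd7c": 1.20, "8h7h6h": 0.40, "2c2d5s": 0.90}
subset_weight = {"AsKd7c": 0.5, "8h7h6h": 0.2, "2c2d5s": 0.3}
estimate = weighted_average(subset_ev, subset_weight)
print(round(estimate, 4))
```

Normalising by the total weight means the weights only need to be correct relative to each other, not sum to any particular number.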

Subsets are currently available for the following numbers of flops: 15, 19, 23, 33, 37, 44, 55, 66, 80, 89, 111, 129, 141, 163.

Performance of the subsets

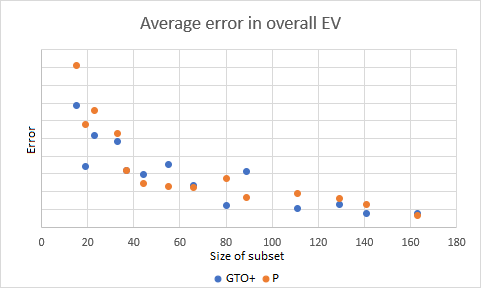

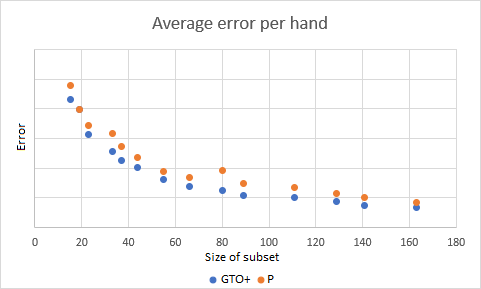

The subsets have been designed to be of the highest possible quality. Although we cannot say for certain whether significant improvement is possible, we believe that, within reason, our subsets are close to optimal.

To test our subsets, we created three test cases: top 30% vs top 30%, top 30% vs bottom 52%, and bottom 37% vs bottom 52%. For each test case, four extensive databases covering all 1755 flops were created, each at a different stack-to-pot ratio ranging from shallow to deep. This comes to 12 test databases (3 test cases × 4 stack-to-pot ratios) against which each subset is evaluated.

The accompanying plot shows the average error per hand versus the size of the subset. Roughly, this error scales as f(x) = 1/x, where x is the number of flops in the subset; in other words, a subset twice as large leads to an error half as large.

We were in the fortunate position to have subsets available from a third party (whose name starts with a P), against which we could compare our own. In the situations we tested, GTO+ seems to outperform the third party slightly but consistently.
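Taking the rough 1/x error scaling at face value, the sketch below tabulates the trade-off across the available subset sizes. It additionally assumes that computation time grows linearly with the number of flops, which is our simplification rather than a measured figure. The function name `tradeoff` is hypothetical.

```python
SUBSET_SIZES = [15, 19, 23, 33, 37, 44, 55, 66, 80, 89, 111, 129, 141, 163]
FULL_SIZE = 1755


def tradeoff(sizes, full=FULL_SIZE):
    """For each subset size x, return (x, share of full-database work, relative error).

    Assumes work is proportional to the number of flops and error ~ c / x,
    so the error is expressed relative to the smallest subset.
    """
    smallest = min(sizes)
    return [(x, x / full, smallest / x) for x in sizes]


for x, work, rel_err in tradeoff(SUBSET_SIZES):
    print(f"{x:4d} flops: {work:6.1%} of full work, error x{rel_err:.2f} vs 15-flop subset")
```

Under these assumptions, the 163-flop subset costs under a tenth of the full 1755-flop database while cutting the error to roughly a tenth of the 15-flop subset's.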

A similar, but slightly less clear, picture emerges when looking at the overall EV for both players, averaged over the 12 databases for each subset. In this case, GTO+ is better 9 times and worse 5 times.
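One way to gauge how much a 9 to 5 split says on its own is a one-sided sign test. The test treats each of the 14 subset comparisons as an independent win or loss with even odds. That is a simplifying assumption, since all comparisons share the same test databases. This calculation is our illustration and was not part of the testing described above.

```python
from math import comb


def sign_test_p_value(wins: int, losses: int) -> float:
    """One-sided sign test: P(at least `wins` wins in wins + losses fair coin flips)."""
    n = wins + losses
    return sum(comb(n, k) for k in range(wins, n + 1)) / 2 ** n


p = sign_test_p_value(9, 5)
print(f"{p:.3f}")  # 0.212
```

A split of 9 to 5 or better would arise by chance about 21% of the time, which is consistent with the EV comparison being less clear-cut than the error comparison.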